2024 CKS Exam Preparation

Start : 2024.1.15

DDL1:2024.2.3 15:00 (Rescheduled)

DDL2:2024.2.8 20:00 (Failed)

DDL3: 2024.2.23 23:30 (Success)

Study environment setup

Other prerequisites

Setting up this Kubernetes cluster may run into many impediments, such as network conditions or misconfigurations, but these can be solved step by step. In the end, the node(s) should be ready, which can be checked as follows:

kubectl get node

Exam tools

alias

alias k=kubectl # will already be pre-configured

vim

set tabstop=2

jsonpath

| Function | Description | Example | Result |
|---|---|---|---|
| text | the plain text | kind is {.kind} | kind is List |
| @ | the current object | {@} | the same as input |
| . or [] | child operator | {.kind}, {['kind']} or {['name\.type']} | List |
| .. | recursive descent | {..name} | 127.0.0.1 127.0.0.2 myself e2e |
| * | wildcard. Get all objects | {.items[*].metadata.name} | [127.0.0.1 127.0.0.2] |
| [start:end:step] | subscript operator | {.users[0].name} | myself |
| [,] | union operator | {.items[*]['metadata.name', 'status.capacity']} | 127.0.0.1 127.0.0.2 map[cpu:4] map[cpu:8] |
| ?() | filter | {.users[?(@.name=="e2e")].user.password} | secret |
| range, end | iterate list | {range .items[*]}[{.metadata.name}, {.status.capacity}] {end} | [127.0.0.1, map[cpu:4]] [127.0.0.2, map[cpu:8]] |
| '' | quote interpreted string | {range .items[*]}{.metadata.name}{'\t'}{end} | 127.0.0.1 127.0.0.2 |
| \ | escape termination character | {.items[0].metadata.labels.kubernetes\.io/hostname} | 127.0.0.1 |

Examples using kubectl and JSONPath expressions:

kubectl get pods -o json
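To make the operators in the table more concrete, here is a minimal Python sketch that evaluates a few of the example expressions by hand against a small document. The `doc` dictionary is a hypothetical input chosen to match the Result column; it is not kubectl output, and the code does not use a JSONPath library.

```python
# Hypothetical sample input, shaped to match the Result column of the table.
doc = {
    "kind": "List",
    "items": [
        {"metadata": {"name": "127.0.0.1"}, "status": {"capacity": {"cpu": "4"}}},
        {"metadata": {"name": "127.0.0.2"}, "status": {"capacity": {"cpu": "8"}}},
    ],
    "users": [
        {"name": "myself", "user": {}},
        {"name": "e2e", "user": {"username": "admin", "password": "secret"}},
    ],
}

# {.kind} : child operator
kind = doc["kind"]

# {.items[*].metadata.name} : wildcard over a list
names = [item["metadata"]["name"] for item in doc["items"]]

# {.users[?(@.name=="e2e")].user.password} : filter
passwords = [u["user"]["password"] for u in doc["users"] if u["name"] == "e2e"]

# {range .items[*]}[{.metadata.name}, {.status.capacity}] {end} : iteration
rows = [(item["metadata"]["name"], item["status"]["capacity"]) for item in doc["items"]]

print(kind, names, passwords, rows)
```

Reading an expression as "which loop or lookup would I write by hand" is often a quick way to debug a jsonpath that returns nothing.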

yq

examples

Read a value

- jq

- tr

- truncate

- crictl

- cut

awk

General usage

Build a command and execute it

kubectl get svc | awk '{cmd="kubectl get svc "$1" -oyaml";system(cmd)}'

- sed

- sha512sum

- podman(to build image)

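Viewing logs

https://kubernetes.io/docs/concepts/cluster-administration/logging/#system-component-logs

For the kubelet component: journalctl -xefu kubelet

For Kubernetes components started as containers: look under /var/log/pods (after breaking the kube-apiserver YAML so that it no longer starts, the reason for the startup failure should be visible in this directory)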

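API group abbreviations

An empty group means the core group; in that case the group/version (gv) shorthand contains only the version, i.e.

kubectl api-resources --api-group=''

Common controller resources mostly belong to the apps group

NAME SHORTNAMES APIVERSION NAMESPACED KIND

Common places where the group has to be filled in

rbac

role.rules.apiGroups only needs the group

audit policy

rules.resources.group only needs the group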
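As an illustration of "only the group", the following Python sketch builds one RBAC rule and one audit policy rule as plain dictionaries. The helper names `role_rule` and `audit_rule` and the chosen resources and verbs are hypothetical; only the field names (`apiGroups`, `resources`, `verbs`, `level`, `group`) come from the Kubernetes schemas.

```python
import json


def role_rule(api_group, resources, verbs):
    """One entry of role.rules; apiGroups takes the bare group name."""
    return {"apiGroups": [api_group], "resources": resources, "verbs": verbs}


def audit_rule(level, group, resources):
    """One entry of an audit Policy's rules; resources[].group takes the bare group name."""
    return {"level": level, "resources": [{"group": group, "resources": resources}]}


# Core group: the empty string, not "v1".
core = role_rule("", ["pods"], ["get", "list"])
# Named group: "apps", not "apps/v1".
apps = role_rule("apps", ["deployments"], ["get"])
# Audit rule for secrets, which live in the core group.
audit = audit_rule("Metadata", "", ["secrets"])

print(json.dumps([core, apps, audit], indent=2))
```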

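Exam methodology

Objective constraints

- Network lag, which made working through the questions extremely choppy;

- A large number of questions: 16 in total, to be finished within 120 minutes, so the average time per question should be 120/16 = 7.5 minutes;

Subjective constraints

- Not fluent with security-related operations;

- No strategy: worked through the questions in order, from first to last;

- Did not assess each question before starting it, so it was unclear whether it could be solved quickly; finding out halfway that it could not wasted a lot of time;

Improvements

- Switch to Hong Kong/Macau mobile network roaming for the exam (if I still fail this time and network lag is one of the reasons, next time I will have to go to Hong Kong in person, lol);

- The passing score is 67. As a rough estimate, getting the certificate requires finishing 67/(100/16) ≈ 11 questions, so 5 questions can be skipped, which leaves a little over 10 minutes per question on average.

- Steps for each question

- Spend 1 minute reading the whole question, understand what it asks, and assess whether you are confident you can complete it;

- If not confident, mark it with the flag, skip it, and move on to the next question;

- Question types to do first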

- audit policy

- apparmor

- pod security standard

- runtime class

- image policy webhook

- trivy & kube-bench

- rbac & opa

- secret

- security context
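The time-budget arithmetic in this section can be checked with a short Python sketch. It assumes that all 16 questions carry equal weight, which is a simplification; the real exam may weight questions differently.

```python
import math

TOTAL_MINUTES = 120   # exam duration from the notes
QUESTIONS = 16        # number of questions from the notes
PASS_SCORE = 67       # passing score from the notes

# Assumption: every question is worth the same number of points.
points_per_question = 100 / QUESTIONS

# Smallest number of fully solved questions that reaches the passing score.
questions_needed = math.ceil(PASS_SCORE / points_per_question)
questions_skippable = QUESTIONS - questions_needed


def minutes_per_question(answered):
    """Average time available when only `answered` questions are attempted."""
    return TOTAL_MINUTES / answered


print(questions_needed, questions_skippable)
print(minutes_per_question(QUESTIONS), round(minutes_per_question(questions_needed), 1))
```

Skipping the questions you are least sure about buys roughly three extra minutes for each remaining one.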

Topics

RBAC

Reference: https://kubernetes.io/docs/reference/access-authn-authz/rbac/

Create the sa, role and rolebinding

kubectl create sa shs-sa

Use this ServiceAccount

kubectl patch -p '{"spec":{"template":{"spec":{"serviceAccountName":"shs-saax","serviceAccount":"shs-saax"}}}}' deployment shs-dep

Tips:

- If the sa is abnormal (e.g. it does not exist), the Deployment's pods will not be created: the ReplicaSet controller detects the problem and no pod is created.

- Both deploy.spec.template.spec.serviceAccount and deploy.spec.template.spec.serviceAccountName need to be modified.

NetworkPolicy

Pod Isolation

- Egress: outbound connections from a pod, non-isolated by default. If a NetworkPolicy selects this pod and has the Egress type, then only the outbound connections mentioned in it are allowed. If several NetworkPolicies select the same pod, then all connections mentioned in those policies are allowed; the policies are additive.

- Ingress: inbound connections to a pod, non-isolated by default. The effect is the same as for Egress: only connections mentioned by a NetworkPolicy can reach this Pod.

Examples

apiVersion: networking.k8s.io/v1

The parameters of to and from are the same, as follows (irrelevant information is omitted):

kubectl explain networkpolicy.spec.ingress

Details of NetworkPolicyPeer are as follows:

kubectl explain networkpolicy.spec.egress.to

For details of IPBlock and LabelSelector, run kubectl explain before writing the YAML.

Tips

- NetworkPolicy is namespaced and only works in the namespace to which it belongs.

- NetworkPolicy can only define allow rules.

- NetworkPolicy selects pods by labels only.

Default network policy

Deny all inbound and outbound traffic for a pod

apiVersion: networking.k8s.io/v1

The OPA(Open Policy Agent) Gatekeeper

Ref: https://kubernetes.io/blog/2019/08/06/opa-gatekeeper-policy-and-governance-for-kubernetes

The Gatekeeper admission controller intercepts all resource create, update and delete requests and runs the configured validation against the relevant resources.

Define a validation template

apiVersion: templates.gatekeeper.sh/v1beta1

Create a concrete constraint

Every namespace must have an hr label

apiVersion: constraints.gatekeeper.sh/v1beta1

Audit

Gatekeeper stores audit results as violations listed in the status field of the relevant Constraint.

apiVersion: constraints.gatekeeper.sh/v1beta1

AppArmor

Confines programs or containers to a limited set of resources, such as Linux capabilities, network access, file permissions, etc.

Works in 2 modes

- enforcing, blocks access

- complain, only reports invalid access

Prerequisites

- Works on Kubernetes v1.4+

- AppArmor kernel module is enabled

- Container runtime supports AppArmor

- Profile is loaded by the kernel

Usage

Add an annotation to the pod that needs to be secured; the name of the container in the Pod is part of the annotation key:

container.apparmor.security.beta.kubernetes.io/<container_name>: <profile_ref>

The profile_ref can be one of:

- runtime/default to apply the runtime's default profile

- localhost/<profile_name> to apply the profile loaded on the host with the name <profile_name>

- unconfined to indicate that no profiles will be loaded
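The annotation format above can be sketched as a small Python helper that builds the key/value pair and rejects profile references outside the three allowed forms. The function name `apparmor_annotation`, the container name `nginx` and the profile name `nginx_apparmor` are hypothetical examples.

```python
ANNOTATION_PREFIX = "container.apparmor.security.beta.kubernetes.io/"


def apparmor_annotation(container_name, profile_ref):
    """Return the pod annotation that applies profile_ref to one container."""
    allowed = (
        profile_ref in ("runtime/default", "unconfined")
        or profile_ref.startswith("localhost/")
    )
    if not allowed:
        raise ValueError(f"unsupported profile_ref: {profile_ref}")
    return {ANNOTATION_PREFIX + container_name: profile_ref}


# Hypothetical container and profile names, for illustration only.
annotations = apparmor_annotation("nginx", "localhost/nginx_apparmor")
print(annotations)
```

The key must name the container, not the pod, so a pod with several containers needs one annotation per container.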

Check that it works

- View Pod Events

- kubectl exec <pod_name> -- cat /proc/1/attr/current

Helpful commands

- Show AppArmor Status

apparmor_status

- Load Profile to kernel

apparmor_parser /etc/apparmor.d/nginx_apparmor

Audit Policy

Reference: https://kubernetes.io/docs/reference/config-api/apiserver-audit.v1/#audit-k8s-io-v1-Policy

Stage

- RequestReceived - before the request is handled by the handler chain

- ResponseStarted - after the response header is sent, but before the response body is sent

- ResponseComplete - after the response body is sent

- Panic - after a panic occurred

Audit Level

- None - don't log events that match this rule

- Metadata - log request metadata only (user, timestamp, resource, verb) but not the request or response body

- Request - log event metadata plus the request body

- RequestResponse - log event metadata plus the request and response bodies

Example

apiVersion: audit.k8s.io/v1

Configure it on kube-apiserver, then check the audit log.

Tips

If the Policy doesn't work as expected, check the kube-apiserver logs to make sure the Policy was loaded successfully. When the policy passed in the kube-apiserver parameters contains errors, kube-apiserver seems to fall back instead of failing, so the rules may not behave as written. The logs look like this:

W0122 16:00:29.139016 1 reader.go:81] Audit policy contains errors, falling back to lenient decoding: strict decoding error: unknown field "rules[0].resources[0].resource"

Pod Security Standard

Reference

Policies

The Pod Security Standards define three different policies to broadly cover the security spectrum. These policies are cumulative and range from highly-permissive to highly-restrictive. This guide outlines the requirements of each policy.

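There are three policies; each one only defines which fields are checked and validated, i.e. the validation scope. From top to bottom, the validation scope of the three policies grows. See the documentation for the specific checks.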

| Profile | Description |

|---|---|

| Privileged | Unrestricted policy, providing the widest possible level of permissions. This policy allows for known privilege escalations. |

| Baseline | Minimally restrictive policy which prevents known privilege escalations. Allows the default (minimally specified) Pod configuration. |

| Restricted | Heavily restricted policy, following current Pod hardening best practices. |

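Modes

There are three ways of handling Pods that violate the policies above: enforce (creation is rejected otherwise), record the violation in the audit log, and show a user-facing warning.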

| Mode | Description |

|---|---|

| enforce | Policy violations will cause the pod to be rejected. |

| audit | Policy violations will trigger the addition of an audit annotation to the event recorded in the audit log, but are otherwise allowed. |

| warn | Policy violations will trigger a user-facing warning, but are otherwise allowed. |

Usage

Label the namespace

# The per-mode level label indicates which policy level to apply for the mode.
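How modes and profiles combine into namespace labels can be sketched in Python. The label prefix `pod-security.kubernetes.io/` is the one used by Pod Security Admission; the helper name `pod_security_labels` and the version value in the example are hypothetical.

```python
LEVELS = ("privileged", "baseline", "restricted")
MODES = ("enforce", "audit", "warn")


def pod_security_labels(mode, level, version=None):
    """Build the namespace labels for one mode/level pair."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    if level not in LEVELS:
        raise ValueError(f"unknown level: {level}")
    labels = {f"pod-security.kubernetes.io/{mode}": level}
    if version is not None:
        # Optional label that pins the policy to a Kubernetes minor version.
        labels[f"pod-security.kubernetes.io/{mode}-version"] = version
    return labels


# Example: reject violations of baseline, only warn about violations of restricted.
labels = {}
labels.update(pod_security_labels("enforce", "baseline"))
labels.update(pod_security_labels("warn", "restricted", version="latest"))
print(labels)
```

Each mode gets its own label, so one namespace can enforce a loose profile while warning about a stricter one.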

SecurityContext

Reference: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/

There are two places to configure security settings:

- pod.spec.securityContext is of type PodSecurityContext and applies to all containers in the pod;

- pod.spec["initContainers","containers"].securityContext is of type SecurityContext, applies only to the current container, and takes precedence over the pod-level setting.

Some fields exist in both places; for those, SecurityContext takes precedence. The fields of the two types are listed below (fields that exist in both are in bold):

| PodSecurityContext | SecurityContext |
|---|---|
| fsGroup | allowPrivilegeEscalation |
| fsGroupChangePolicy | capabilities |
| **runAsGroup** | privileged |
| **runAsNonRoot** | procMount |
| **runAsUser** | readOnlyRootFilesystem |
| **seLinuxOptions** | **runAsGroup** |
| **seccompProfile** | **runAsNonRoot** |
| supplementalGroups | **runAsUser** |
| sysctls | **seLinuxOptions** |
| **windowsOptions** | **seccompProfile** |
| | **windowsOptions** |

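Modify the Deployment secdep in the sec-ns namespace according to the following requirements:

1. Start the containers with user ID 30000 (set runAsUser to 30000)

2. Do not allow processes to gain more privileges than their parent process (disable allowPrivilegeEscalation)

3. Mount the container's root filesystem read-only (readOnlyRootFilesystem)

Notes:

- readOnlyRootFilesystem and allowPrivilegeEscalation exist only in SecurityContext, so they must be set on every container; mind the number of containers so that none is missed;

- runAsUser exists in both PodSecurityContext and SecurityContext, so setting it in PodSecurityContext alone is enough.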
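The precedence rule can be modelled with a few lines of Python. This is a simplified sketch, not how the kubelet is implemented: it only covers the fields shared by both types, and the function name `effective_settings` is hypothetical.

```python
# Fields that exist in both PodSecurityContext and SecurityContext.
SHARED_FIELDS = {
    "runAsGroup", "runAsNonRoot", "runAsUser",
    "seLinuxOptions", "seccompProfile", "windowsOptions",
}


def effective_settings(pod_ctx, container_ctx):
    """Container-level values win; shared pod-level values fill the gaps."""
    effective = {k: v for k, v in pod_ctx.items() if k in SHARED_FIELDS}
    effective.update(container_ctx)
    return effective


pod_ctx = {"runAsUser": 30000, "fsGroup": 2000}
container_ctx = {"allowPrivilegeEscalation": False, "readOnlyRootFilesystem": True}

# runAsUser is inherited from the pod level; the other two are container-only.
inherited = effective_settings(pod_ctx, container_ctx)
# A container-level runAsUser overrides the pod-level value.
overridden = effective_settings(pod_ctx, {"runAsUser": 1000})
print(inherited, overridden)
```

This mirrors the exercise: runAsUser can be set once at pod level, while the two container-only fields have to be repeated for every container.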

RuntimeClass

Reference: https://kubernetes.io/docs/concepts/containers/runtime-class/

- Create a RuntimeClass

- Specify the created RuntimeClass in pod.spec.runtimeClassName

Secret

Reference: https://kubernetes.io/docs/concepts/configuration/secret

- Secret Type

- Mount to a pod

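Speed practice [source]

In the namespace istio-system, retrieve the contents of the existing secret named db1-test. Store the username field in a file named /cks/sec/user.txt and the password field in a file named /cks/sec/pass.txt.

Note: you must create both files; they do not exist yet.

Note: do not use or modify the previously created files in the following steps; create new temporary files if needed.

In the istio-system namespace, create a new secret named db2-test with the following content:

username: production-instance

password: KvLftKgs4aVH

- Finally, create a new Pod that can access the secret db2-test through a volume:

Pod name: secret-pod

Namespace: istio-system

Container name: dev-container

Image: nginx

Volume name: secret-volume

Mount path: /etc/secret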
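The first part of the exercise relies on the fact that values under a Secret's data field are base64-encoded. A minimal Python sketch of the decoding step follows; `sample_data` is a hypothetical stand-in for the data map of a real secret, built from the db2-test values given in the exercise, and `decode_secret_data` is an illustrative helper name.

```python
import base64


def decode_secret_data(data):
    """Decode every base64 value of a Secret's .data map into text."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


# Hypothetical stand-in for the .data map of a secret fetched as JSON.
# The values are encoded here instead of being hard-coded as base64 strings.
sample_data = {
    "username": base64.b64encode(b"production-instance").decode("ascii"),
    "password": base64.b64encode(b"KvLftKgs4aVH").decode("ascii"),
}

decoded = decode_secret_data(sample_data)
print(decoded["username"])
```

In the exam the same result is usually obtained on the command line by piping the jsonpath output through base64 -d and redirecting it into the target file.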

ServiceAccount

Reference: https://kubernetes.io/docs/concepts/security/service-accounts/

- Prevent kubernetes from injecting credentials for a pod

1 | kubectl explain sa.automountServiceAccountToken |

Set one of fields above to false to prevent auto injection for a pod.

Restrict access to Secrets

Set annotationkubernetes.io/enforce-mountable-secretstotruefor aServiceAccount, then only secrets in the field ofsa.secretsof this ServiceAccount was allowed to use in a pod, such as a secretvolume,envFrom,imagePullSecrets.How to use ServiceAccount to connect to apiserver? reference

1

2

3curl --cacert /var/run/secrets/kubernetes.io/serviceaccount/ca.crt --header "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" -X GET https://kubernetes.default.svc/api/v1/namespaces/default/secrets

or

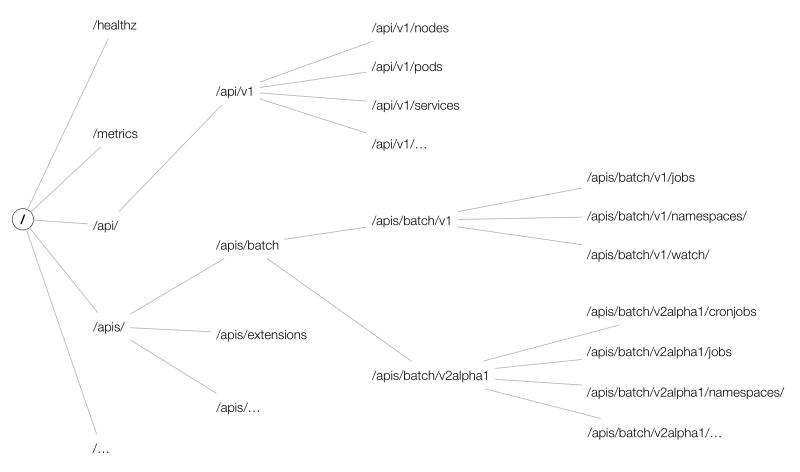

curl -k -XGET --header "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" https://kubernetes.default.svc/api/v1/namespaces/default/secrets整个kubeAPIServer提供了三类API Resource接口:

- core group:主要在

/api/v1下; - named groups:其 path 为

/apis/$GROUP/$VERSION; - 系统状态的一些 API:如

/metrics、/version等;

而API的URL大致以

/apis/{group}/{version}/namespaces/{namespace}/{resources}/{name}组成,结构如下图所示:

Tips:

在apiserver的URL中,资源需要用复数形式,如:

1

2curl -k -H "Authorization: Bearer $(cat /run/secrets/kubernetes.io/serviceaccount/token)" \

https://kubernetes.default.svc/api/v1/namespaces/default/pods/shs-dep-b56c568d6-n8h6d- core group:主要在
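The URL rules above (core group under /api, named groups under /apis, plural resource names) can be sketched in Python. The helpers `api_path` and `build_request` are hypothetical names; the request object is only constructed, not sent, and the token value in the example is a dummy.

```python
import urllib.request

API_SERVER = "https://kubernetes.default.svc"


def api_path(group, version, namespace, resources, name=None):
    """Build the path of a namespaced resource; `resources` must be plural."""
    # Core group (empty string) lives under /api, named groups under /apis.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resources}"
    return f"{path}/{name}" if name else path


def build_request(path, token):
    """Prepare a GET request carrying the ServiceAccount token as a bearer token."""
    return urllib.request.Request(
        API_SERVER + path,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


pod_path = api_path("", "v1", "default", "pods", "shs-dep-b56c568d6-n8h6d")
deploy_path = api_path("apps", "v1", "default", "deployments")
req = build_request(pod_path, "dummy-token")  # dummy token, for illustration only
print(req.full_url)
```

Inside a pod the real token would be read from /var/run/secrets/kubernetes.io/serviceaccount/token, and the CA certificate next to it would be used to verify the server.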

etcd

How to use etcdctl to get raw data from etcd?

ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt \

Upgrade kubernetes version

Follow these steps:

for master

- k drain controller-plane

- apt-mark unhold kubeadm

- apt-mark hold kubelet kubectl

- apt update && apt upgrade -y

- kubeadm upgrade plan

- kubeadm upgrade apply v1.2x.x

- kubeadm upgrade plan (for check purpose)

- apt-mark hold kubeadm

- apt-mark unhold kubelet kubectl

- apt install kubectl=1.2x.x kubelet=1.2x.x

- apt-mark hold kubelet kubectl

- systemctl restart kubelet

- systemctl status kubelet

- k uncordon controller-plane

for node

- k drain node

- apt update

- apt-mark unhold kubeadm

- apt-mark hold kubectl kubelet

- apt install kubeadm=1.2x.x

- kubeadm upgrade plan

- kubeadm upgrade node

- apt-mark hold kubeadm

- apt-mark unhold kubectl kubelet

- apt install kubectl=1.2x.x kubelet=1.2x.x

- systemctl restart kubelet

- systemctl status kubelet

- k uncordon node

check upgrade result

k get node

ImagePolicyWebhook

https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/#imagepolicywebhook

Using security tools

kube-bench

A tool that detects potential security issues and gives specific remediation steps for each of them.

Reference:

Simple way in a kubernetes cluster created by kubeadm

Contents

Consists of the following topics:

- master

- etcd

- controlplane

- node

- policies

Each topic starts with a list of the checked items and their status, followed by a list of remediations for the FAIL or WARN items. You can fix those issues by following the given instructions. Each topic ends with a summary.

Here is an output example for the master topic

[WARN] 1.1.9 Ensure that the Container Network Interface file permissions are set to 600 or more restrictive (Manual)

The full output is available at this link

trivy

Reference: https://github.com/aquasecurity/trivy

Scan a docker image

trivy image --severity LOW,MEDIUM ghcr.io/feiyudev/shs:latest

Scan the images used by all pods in a namespace for HIGH and CRITICAL vulnerabilities, and delete the pods that have them

k get pod -A -ojsonpath="{range .items[*]}{.spec['initContainers','containers'][*].image} {.metadata.name} {'#'} {end}" | sed 's|#|\n|g' | sed 's|^ ||g' | sed 's| $||g' | awk '{cmd="echo "$2"; trivy -q image "$1" --severity HIGH,CRITICAL | grep Total";system(cmd)}'

Points to note about this command:

- jsonpath range

- awk system(cmd)

- sed replace

sysdig

Reference: https://github.com/draios/sysdig

Installation(Based on Ubuntu 22.04)

- Download the deb from sysdig-release

- sudo dpkg -i sysdig-0.34.1-x86_64.deb

- sudo apt -f install

Output format

%evt.num %evt.outputtime %evt.cpu %proc.name (%thread.tid) %evt.dir %evt.type %evt.info

Notes:

- evt.dir, aka event direction: < represents out, > represents in.

- evt.type, aka event type: think of it as the name of a system call.

Chisels

Predefined function sets based on sysdig events, used to handle more complex scenarios. They are located in /usr/share/sysdig/chisels on a Linux machine.

What are those chisels?

- To see chisels.

sysdig -cl

- To use a chisel

See HTTP log

- Advanced usage of a chisel

sysdig -i spy_file

Usage

- Save events to a file

sysdig -w test.scap

- Read events from a file while analyzing (by chisels)

sysdig -r test.scap -c httptop

- Specify the format to be used when printing the events

-p <output_format>, --print=<output_format>

Specify the format to be used when printing the events.

With -pc or -pcontainer will use a container-friendly format.

With -pk or -pkubernetes will use a kubernetes-friendly format.

With -pm or -pmesos will use a mesos-friendly format.

See the examples section below for more info.

sysdig -r test.scap -c httptop -pc

- Specify the number of events Sysdig should capture by passing it the -n flag. Once Sysdig captures the specified number of events, it'll automatically exit:

sysdig -n 5000 -w test.scap

- Use the -C flag to configure Sysdig so that it breaks the capture into smaller files of a specified size.

The following example continuously saves events to files < 10MB:

sysdig -C 10 -w test.scap

- Specify the maximum number of files Sysdig should keep with the -W flag. For example, you can combine the -C and -W flags like so:

sysdig -C 10 -W 4 -w test.scap

- You can analyze the processes running in the WordPress container with:

sysdig -pc -c topprocs_cpu container.name=wordpress-sysdig_wordpress_1

- -M <num_seconds>

Stop collecting after <num_seconds> is reached.

Help

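To see which fields are available in filters, run sysdig -l to list all supported fields. For example, the container-related filter fields are:

ubuntu@primary:~$ sysdig -l | grep "^container."

As shown, container.id only takes the first 12 characters; alternatively, use the full container id, i.e. container.full_id. The fields available for k8s are:

ubuntu@primary:~$ sysdig -l | grep "^k8s."

Traps

Pitfall here

When filtering by container.id, the id must be exactly 12 characters long, otherwise no data comes out. The container id shown by crictl ps is 13 characters long, so watch the length when using it with sysdig.

ubuntu@primary:~$ crictl ps | grep -v ^C | awk '{print $1,$2,$6,$7}'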
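The length pitfall can be avoided by always truncating the id before building the filter. A minimal Python sketch follows; the helper names and the 13-character id are made up for illustration.

```python
SYSDIG_ID_LENGTH = 12  # container.id matches on the first 12 characters


def sysdig_container_id(container_id):
    """Truncate a crictl-style container id to the length sysdig expects."""
    return container_id[:SYSDIG_ID_LENGTH]


def sysdig_filter(container_id):
    """Build a sysdig filter expression for one container."""
    return f"container.id={sysdig_container_id(container_id)}"


# Made-up 13-character id, as printed by crictl ps.
crictl_id = "a1b2c3d4e5f67"
print(sysdig_filter(crictl_id))
```

On the command line the same truncation can be done with cut -c1-12 on the first column of the crictl ps output.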

falco

Reference: https://falco.org/docs

strace

Monitors the system calls and signals of a process. Basic usage:

- Attach to an existing process: strace -p <pid>

- Start a binary directly: strace <binary-name>

- Filter the output: strace -e trace=file

Exam instructions

Requirements for your computer, microphone, camera, speaker, etc.

Don't use headphones or earbuds.

- [PSI Bridge FAQ] System Requirements

- System Requirements to take the exam

- Recommended browser: Google Chrome

Exam Details

Online tests, 15-20 performance-based tasks, 2 hours to complete the tasks.

Don't cheat: the audio, camera and screen capture of the test will be reviewed.
